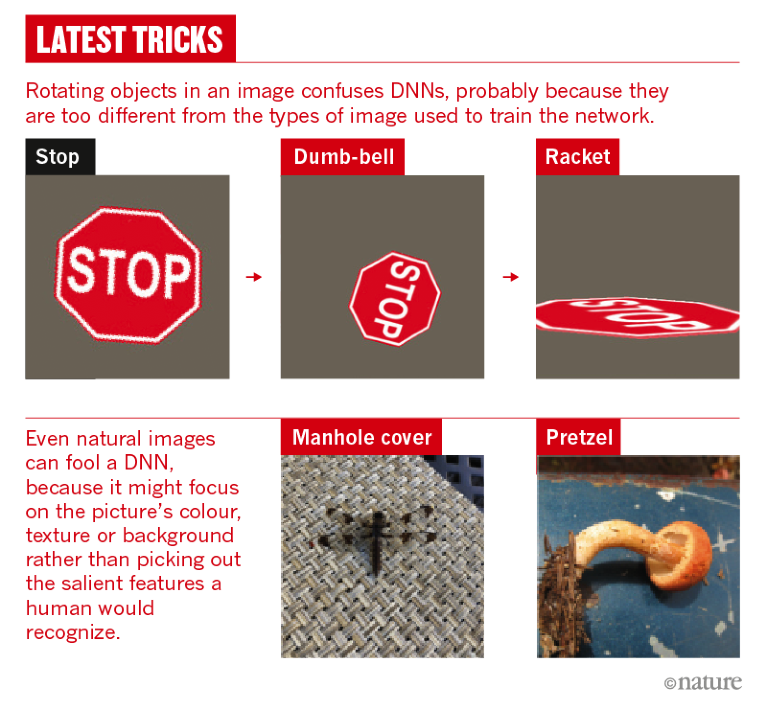

computer vision - How is it possible that deep neural networks are so easily fooled? - Artificial Intelligence Stack Exchange


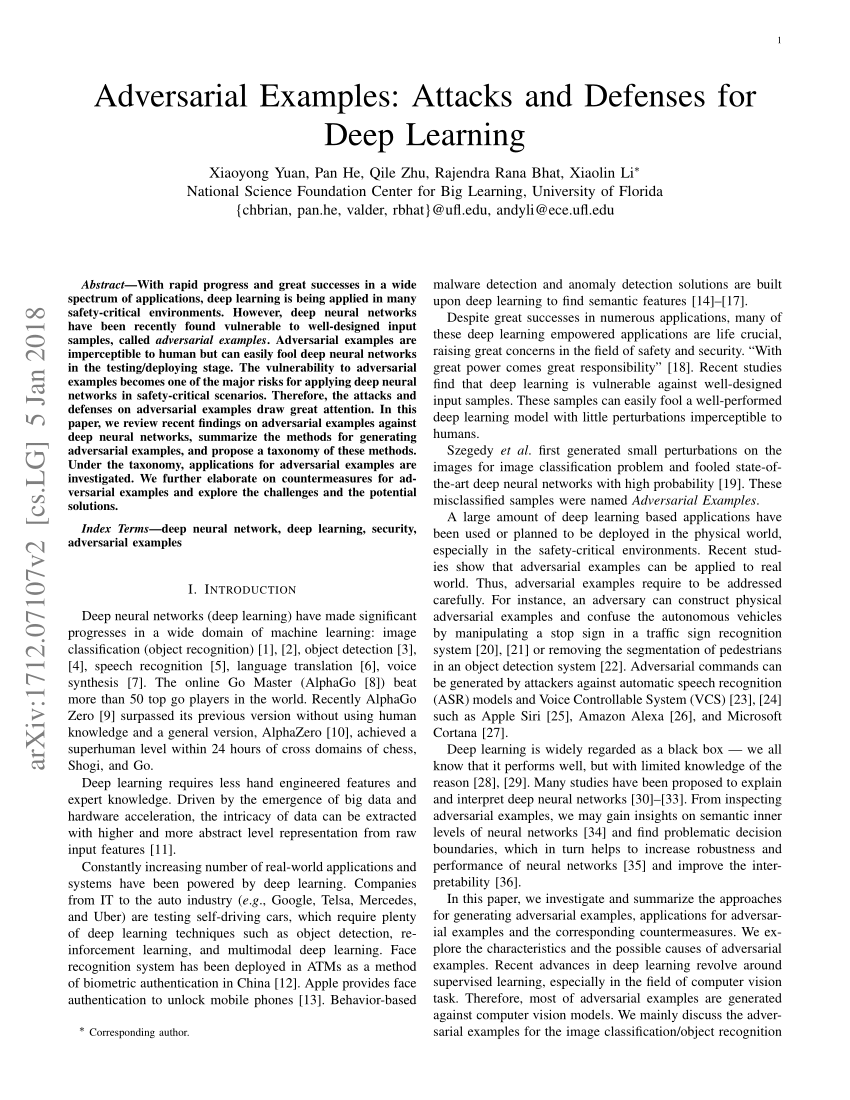

Improving the robustness and accuracy of biomedical language models through adversarial training - ScienceDirect

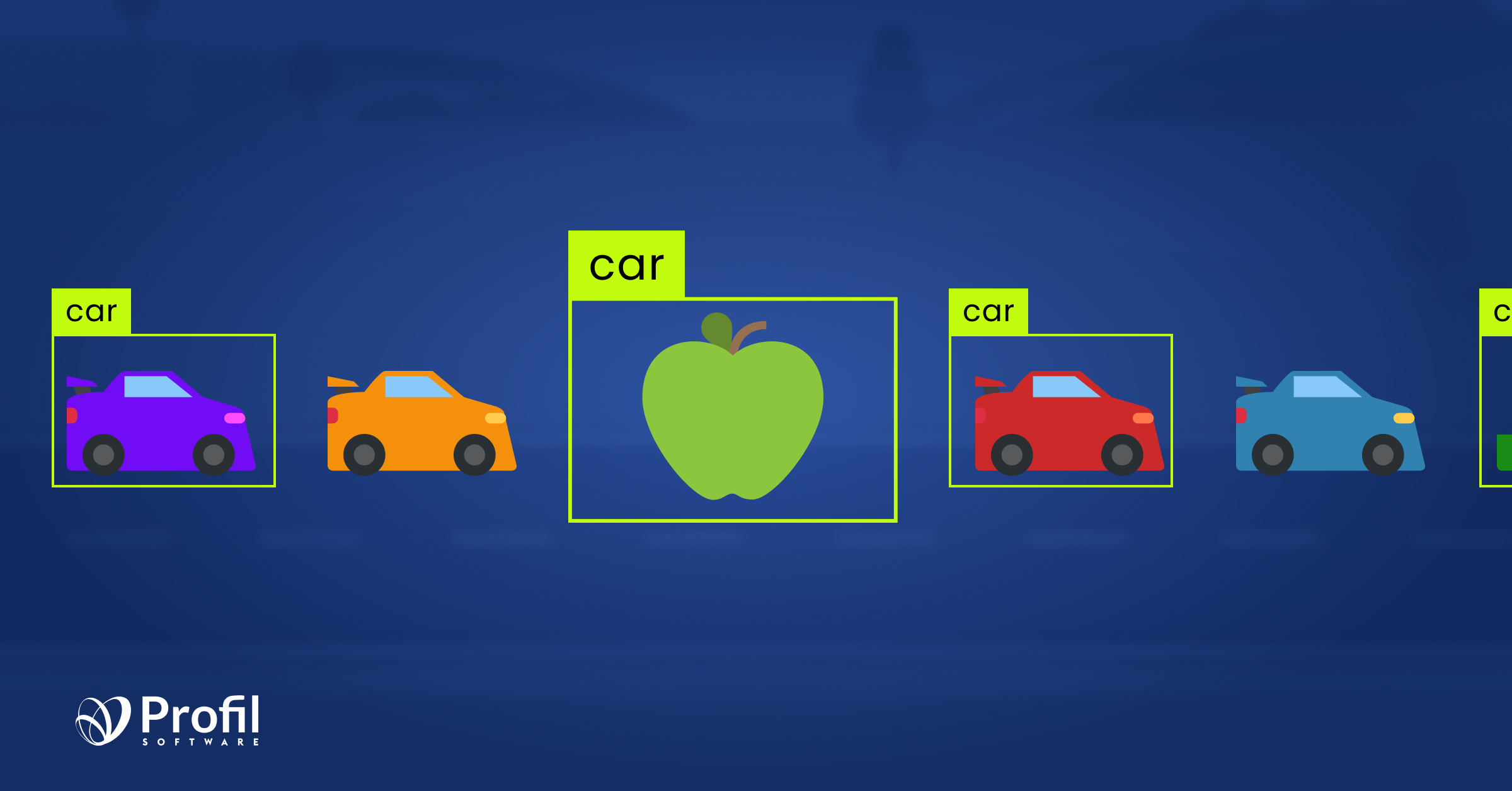

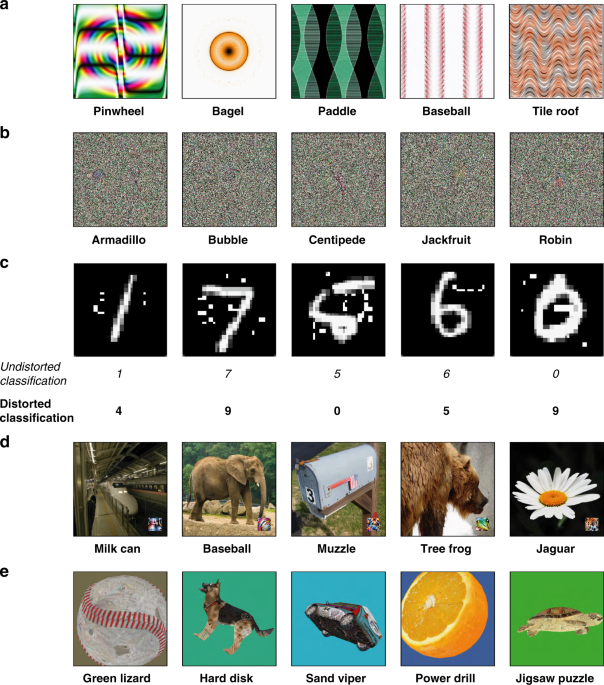

Diagram showing image classification of real images (left) and fooling...

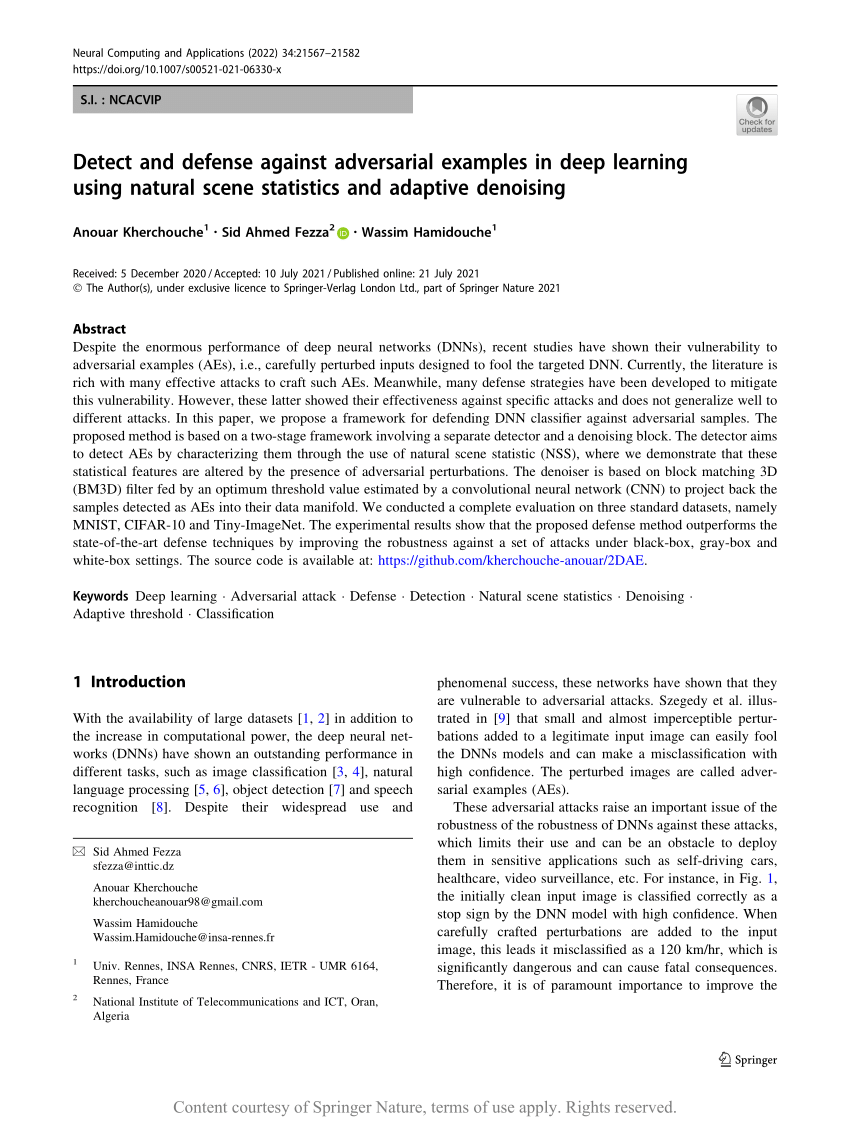

Detect and defense against adversarial examples in deep learning using natural scene statistics and adaptive denoising

3 practical examples for tricking Neural Networks using GA and FGSM | Blog - Profil Software, Python Software House With Heart and Soul, Poland

Towards Faithful Explanations for Text Classification with Robustness Improvement and Explanation Guided Training - ACL Anthology

![\[PDF\] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/5-Table4-1.png)

![\[PDF\] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/4-Table2-1.png)

![\[PDF\] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/2-Table1-1.png)

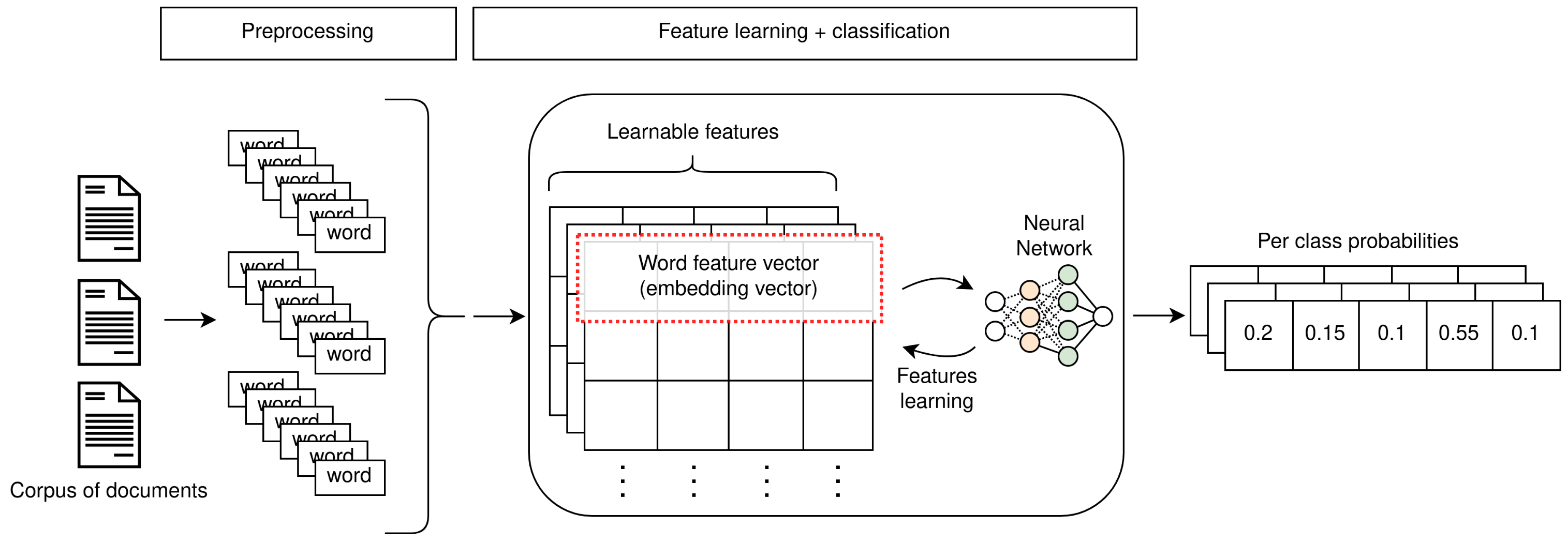

![Natural Language Processing (NLP) \[A Complete Guide\]](https://wordpress.deeplearning.ai/wp-content/uploads/2022/10/09.-CNN-BasedTextClassification_Captioned-1024x577.png)

![\[PDF\] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/2-Figure1-1.png)

![\[PDF\] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/6-Table5-1.png)

![\[R\] A simple explanation of Reinforcement Learning from Human Feedback (RLHF) : r/MachineLearning](https://preview.redd.it/r-a-simple-explanation-of-reinforcement-learning-from-human-v0-fp5mh1sdayca1.png?width=2324&format=png&auto=webp&s=2d3955630e9394e5c4ba2716a4fc08e7ef4c8d1a)

![\[PDF\] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/3-Figure3-1.png)